Hello :) Today is Day 178!

A quick summary of today:

- started my own data engineering project

- GCP as cloud storage solution

- terraform for infrastructure management

- mage for orchestration

- dbt for modelling

- maybe more to come

The last bit of Module 4 was about creating a Spark cluster in GCP using Dataproc.

We create a cluster, and Dataproc creates the VM for it.

On that cluster we can submit jobs of different types. With a PySpark job, for example, we submit a Python script we wrote (one that uses PySpark) and it runs in the cloud.

And because this cluster is created on GCP, it can directly read data from GCS and also write data to it.
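To make this concrete, here is a minimal sketch of what such a script could look like. The bucket name, folder names and the `grade` column are hypothetical placeholders, not anything from the course material. The function expects a `SparkSession` to be passed in.

```python
def gcs_path(bucket: str, *parts: str) -> str:
    """Build a gs:// URI from a bucket name and path segments."""
    segments = [bucket.strip("/")] + [p.strip("/") for p in parts]
    return "gs://" + "/".join(segments)


def count_by_column(spark, input_uri: str, output_uri: str, column: str) -> None:
    """Read parquet from GCS, count rows per value of `column`, write back to GCS.

    `spark` is a pyspark.sql.SparkSession, e.g. created inside the job with
    SparkSession.builder.appName("example").getOrCreate().
    """
    df = spark.read.parquet(input_uri)
    counts = df.groupBy(column).count()
    counts.write.mode("overwrite").parquet(output_uri)


# Hypothetical call inside the submitted script:
# count_by_column(
#     spark,
#     gcs_path("my-bucket", "raw", "loans"),
#     gcs_path("my-bucket", "report", "loans_by_grade"),
#     column="grade",
# )
```

Because the paths are plain `gs://` URIs, the same function works for any bucket the cluster can access.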

Now … onto the Lending club data pipeline project

It is still early stages, but I have an idea of how to combine the different tools I learned in the DataTalksClub data engineering zoomcamp. I am taking inspiration from the zoomcamp, where full documentation is encouraged, so I will try to create nice graphs, visualisations and explanations when the project is near completion. I also decided to use structured commit messages: [feature], [pretty] for renamings and similar, [delete] for removed files, and maybe more as needed.

What data to use?

I have had the Lending Club dataset in mind for a while. But I wanted a smaller version, so that it is not so heavy on my machine during development and also cheaper to keep on GCS. So I ended up with this smaller version from Kaggle.

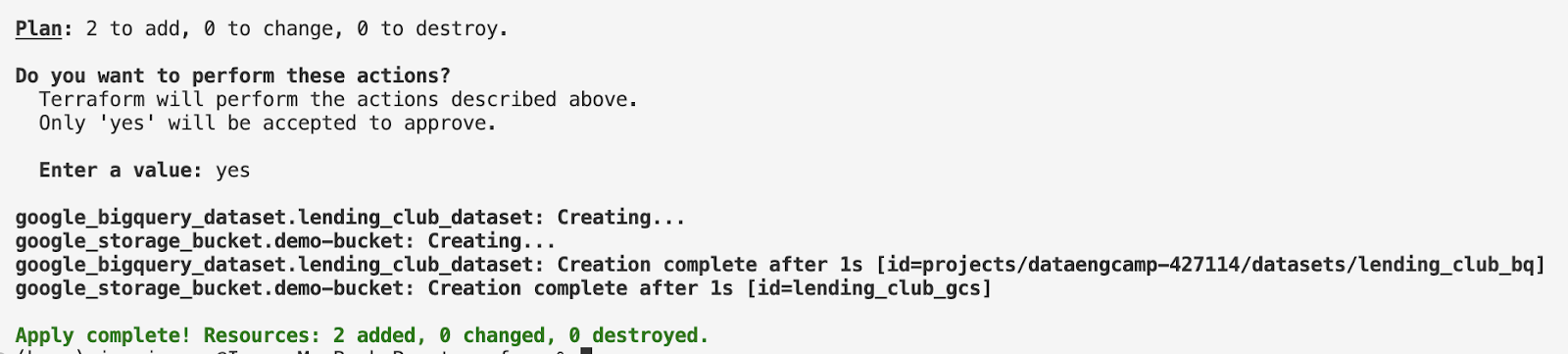

First, I started off with terraform and creating resources on GCP

I have only two terraform files - main.tf and variables.tf - here in my repo. As I am writing this before finishing for today, I realise that creating these resources is a bit manual (running terraform init/plan/apply by hand), so I wonder how I can automate it.
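One possible way to take the manual steps out is a small Python wrapper that runs the three commands in order. This is only a sketch of the idea, not what is in the repo: the function names and the `tfplan` file name are made up for illustration. It assumes the `terraform` binary is on the PATH and that GCP credentials are already configured.

```python
import subprocess
from pathlib import Path


def terraform_commands(plan_file: str = "tfplan") -> list[list[str]]:
    """Return the init/plan/apply commands in the order they should run."""
    return [
        ["terraform", "init", "-input=false"],
        # Save the plan to a file so that apply runs exactly what was planned.
        ["terraform", "plan", "-input=false", f"-out={plan_file}"],
        # Applying a saved plan file does not ask for interactive approval.
        ["terraform", "apply", "-input=false", plan_file],
    ]


def run_terraform(workdir: str, plan_file: str = "tfplan") -> None:
    """Run each command inside `workdir`, stopping at the first failure."""
    for cmd in terraform_commands(plan_file):
        print("running:", " ".join(cmd))
        subprocess.run(cmd, cwd=Path(workdir), check=True)
```

Calling `run_terraform("terraform")` would then run all three steps against the folder holding main.tf and variables.tf (the folder name here is a guess).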

Next, I initialised my good ol’ pal mage.ai

The above pipeline (called ‘data_raw_to_gcs’)

- first loads data using Kaggle’s API. I had to set up my kaggle credentials for this too.

- next it checks basic data types (str and float). I also wrote some basic tests like making sure we get the same columns. To be honest, maybe these two ‘data loader’ blocks can become one.

- the 3rd block does some basic transformation - like converting date columns to the right format

- the final block connects to GCS and uploads the final raw file version as a parquet file
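The checks and the date conversion in the middle blocks can be sketched in plain Python as below. This is not the actual block code, and it leaves out mage's decorators and pandas on purpose to stay self-contained. The column names and the 'Dec-2018' date format are assumptions about the dataset, so adjust them to the real file.

```python
from datetime import date, datetime

# Hypothetical schema: the real dataset has many more columns.
EXPECTED_TYPES = {"loan_amnt": float, "grade": str, "issue_d": str}


def check_columns(rows: list[dict]) -> None:
    """Fail if any row's columns differ from the expected set."""
    for row in rows:
        assert set(row) == set(EXPECTED_TYPES), f"unexpected columns: {sorted(row)}"


def check_types(rows: list[dict]) -> None:
    """Fail if a value does not have the expected basic type (str or float)."""
    for row in rows:
        for col, expected in EXPECTED_TYPES.items():
            assert isinstance(row[col], expected), f"{col} is not {expected.__name__}"


def parse_month(value: str) -> date:
    """Convert a string like 'Dec-2018' into the first day of that month."""
    return datetime.strptime(value, "%b-%Y").date()


def transform(rows: list[dict]) -> list[dict]:
    """Return new rows with the date column converted to a real date."""
    return [{**row, "issue_d": parse_month(row["issue_d"])} for row in rows]
```

Keeping each check in its own small function makes it easy to merge the two 'data loader' steps later without rewriting the tests.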

The code for all the blocks can be found here. I spent a bit of time here configuring connections and thinking about how to design this, and about the structure of my project. Is it better

- to split the big dataset into dimension tables in dbt, so that most of the data transformations, data tests and documentation are kept in one place (dbt), or

- to split the big dataset into dimension tables in mage, load those to GCS, and use dbt only for data modelling - adding tests, documentation and creating the fact table?

I feel like the 1st one is better, because all the transformations I am planning to do can be done in SQL, and if I do them in Python, dbt loses a bit of its value. So I decided to go with option 1, partly so that I can get more practice with data modelling in dbt. ChatGPT also seems to agree that option 1 separates the steps of the ELT process better.

Then I created data in BigQuery using the uploaded file from GCS

I did this manually, which I only realised when I started writing this blog. The manual bit was bringing the data from GCS into BigQuery and then partitioning and clustering it. A mage pipeline might be a better fit for this task.
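As a starting point for automating this step, here is a small sketch that builds the two BigQuery SQL statements involved: one that exposes the parquet file in GCS as an external table, and one that copies it into a partitioned and clustered table. The dataset, table and column names are hypothetical, and it assumes the partition column is stored as a DATE (a TIMESTAMP column would need `TIMESTAMP_TRUNC` instead).

```python
def build_load_sql(
    gcs_uri: str,
    dataset: str,
    table: str,
    partition_col: str,
    cluster_cols: list[str],
) -> list[str]:
    """Return the SQL statements to run, in order."""
    external = f"{dataset}.{table}_external"
    target = f"{dataset}.{table}"
    return [
        # Step 1: external table pointing at the parquet file in GCS.
        f"CREATE OR REPLACE EXTERNAL TABLE `{external}`\n"
        f"OPTIONS (format = 'PARQUET', uris = ['{gcs_uri}']);",
        # Step 2: native table, partitioned by month and clustered.
        f"CREATE OR REPLACE TABLE `{target}`\n"
        f"PARTITION BY DATE_TRUNC({partition_col}, MONTH)\n"
        f"CLUSTER BY {', '.join(cluster_cols)} AS\n"
        f"SELECT * FROM `{external}`;",
    ]


# Hypothetical names, only to show the shape of the output.
for statement in build_load_sql(
    "gs://my-bucket/raw/loans.parquet", "lending_club", "loans", "issue_d", ["grade"]
):
    print(statement, end="\n\n")
```

The printed statements can be pasted into the BigQuery console, or run from a pipeline block later on.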

Then, I went to set up dbt cloud

I managed to connect my repo to dbt cloud and initialise the project. I also added the packages that I will use (dbt utils) and did some basic cleaning operations. Code is here. Before I do anything else, I need to figure out my data modelling architecture - fact and dimension tables. I learned about those concepts on Day 170, so I will refer to them when making my plan.

For tomorrow I will think about the data modelling structure, and automating more of the manual bits I did today.

That is all for today!

See you tomorrow :)