Hello :) Today is Day 176!

A quick summary of today:

- finished Module 4: analysis engineering and using dbt from the data engineering zoomcamp

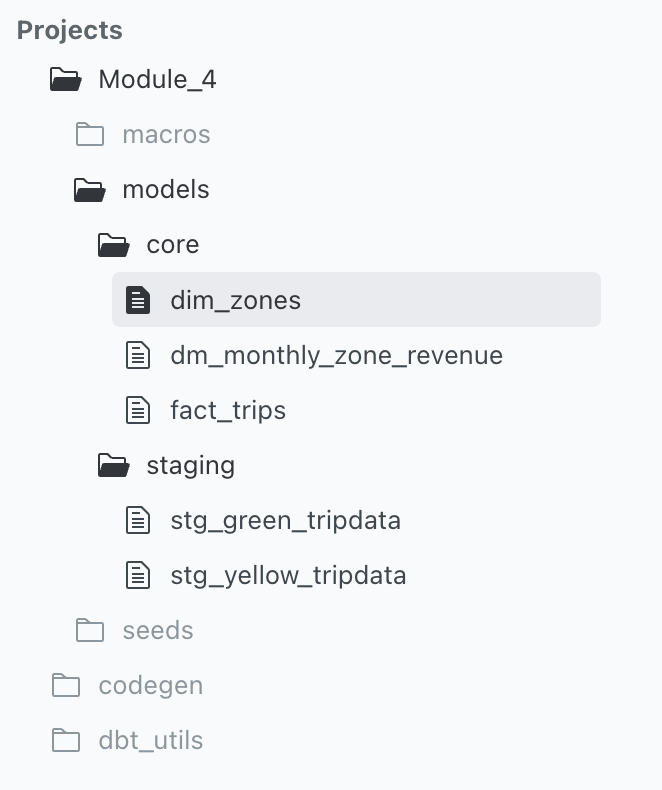

A preview of what I created in the end

Continuing from yesterday with dbt ~

First I learned about testing and documenting dbt models

We need to make sure the data we deliver to the end user is correct, and how do we make sure that we are not building our dbt models on top of incorrect data?

dbt tests

- assumptions that we make about our data

- tests in dbt are essentially a select sql query

- these assumptions get compliled to sql that returns the amount of failing records

- tests are defined on a column in the .yml file

- dbt provides basic tests to check if the column values are:

- unique, not null, accepted values, a foreign key to another table

- we can create custom tests as queries

Before writing tests, to ensure our data’s schema is correct we can autogenerate it using a package

First, include the package in packages.yml (and run dbt deps to install it)

Then, we can use a macro the auto-generate schemas for our data

And when we compile the above code, we get (image is truncated)

Which we add to the schema.yml which keeps info about our data. These schema.yml files are not needed to run the models, but are recommended because it serves as documentation.

Now, adding tests is fairly straighforward

For example, we can add tests to check that the column tripid is unique and not null

In addition to severity, we can add thresholds as well. Another test to check that pickup_locationid and dropoff_locationid are within the existing locationids in a reference table

Also added a test to check that payment_type is within certain values

We use a variable here, which is defined in the dbt_project.yml and is a list of [1,2,3,4,5,6]. It is just an example of how we can use variables, because the same check needs to be done for green_tripdata (the above tests are for columns in the yellow_tripdata table).

And when we run dbt build now

Next, learning about documentation

If we check the pics from above, there is a description: "" field, which is part of dbt documentation.

dbt provides a way to generate documentation for our dbt project and render it as a website. The documentation includes:

- Information about the project

- Model code

- Model dependencies

- Sources

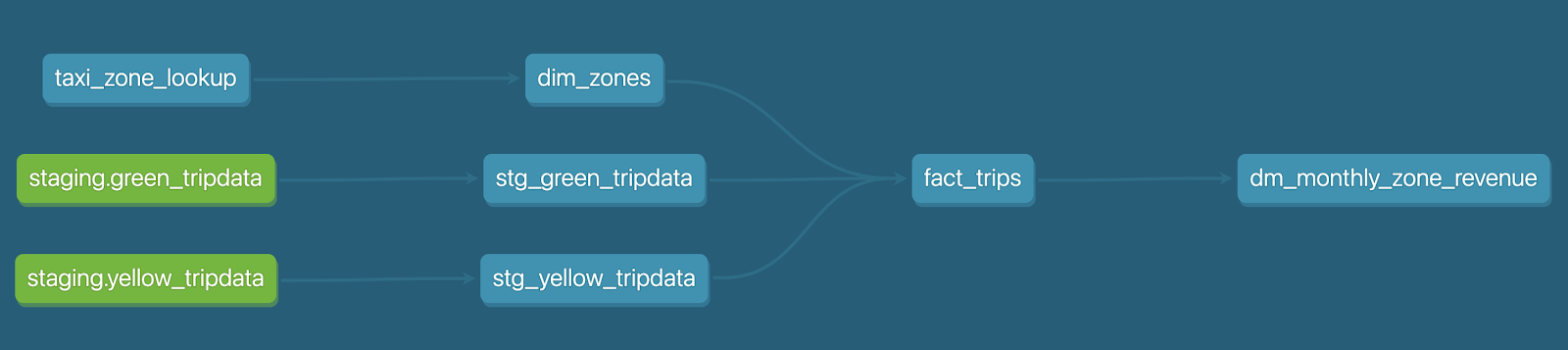

- Auto-generated DAG from the

refandsourcemacros - Descriptions (from

.ymlfile) and tests - Information about our data warehouse (

information_schema) - Column names and data types

- Table statistics like size and rows

dbt docs can also be hosted in dbt Cloud.

Once we are happy with the descriptions in our schema.yml files

for example:

We can run dbt docs generate

And we get a docs page

The structure of folders is the same

And for example in dim_zones we can see

There is a database tab where we can look at documentation related to data in BigQuery

Also in the bottom right corner there is a button that shows us the lineage of our project

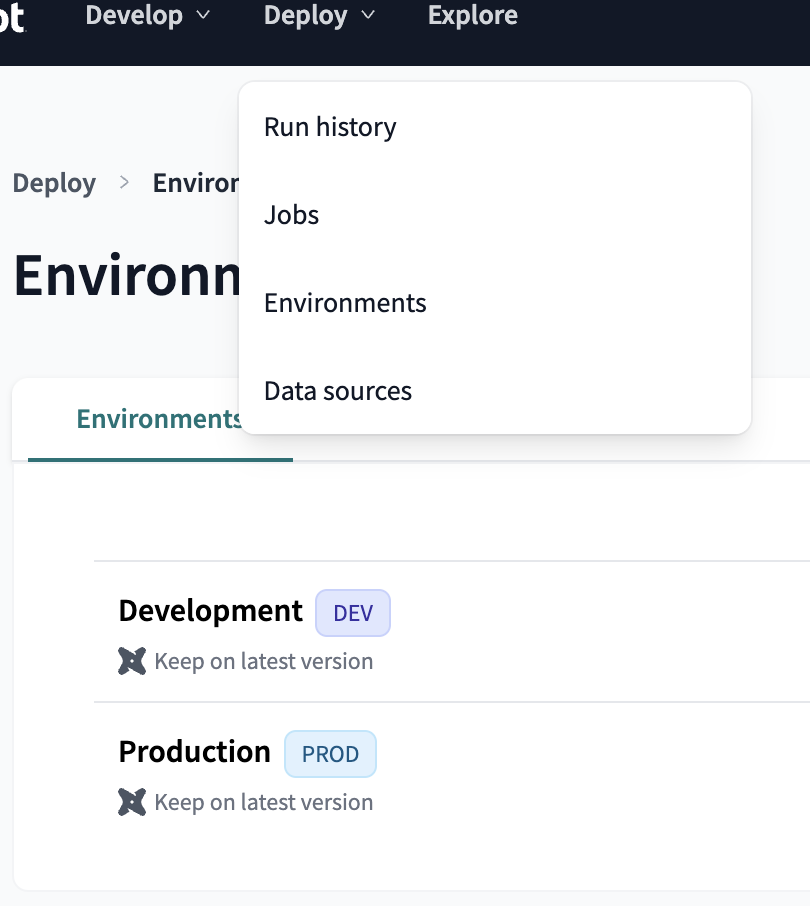

Next, learning about deployment using dbt cloud

To deploy we need to create a PROD environment

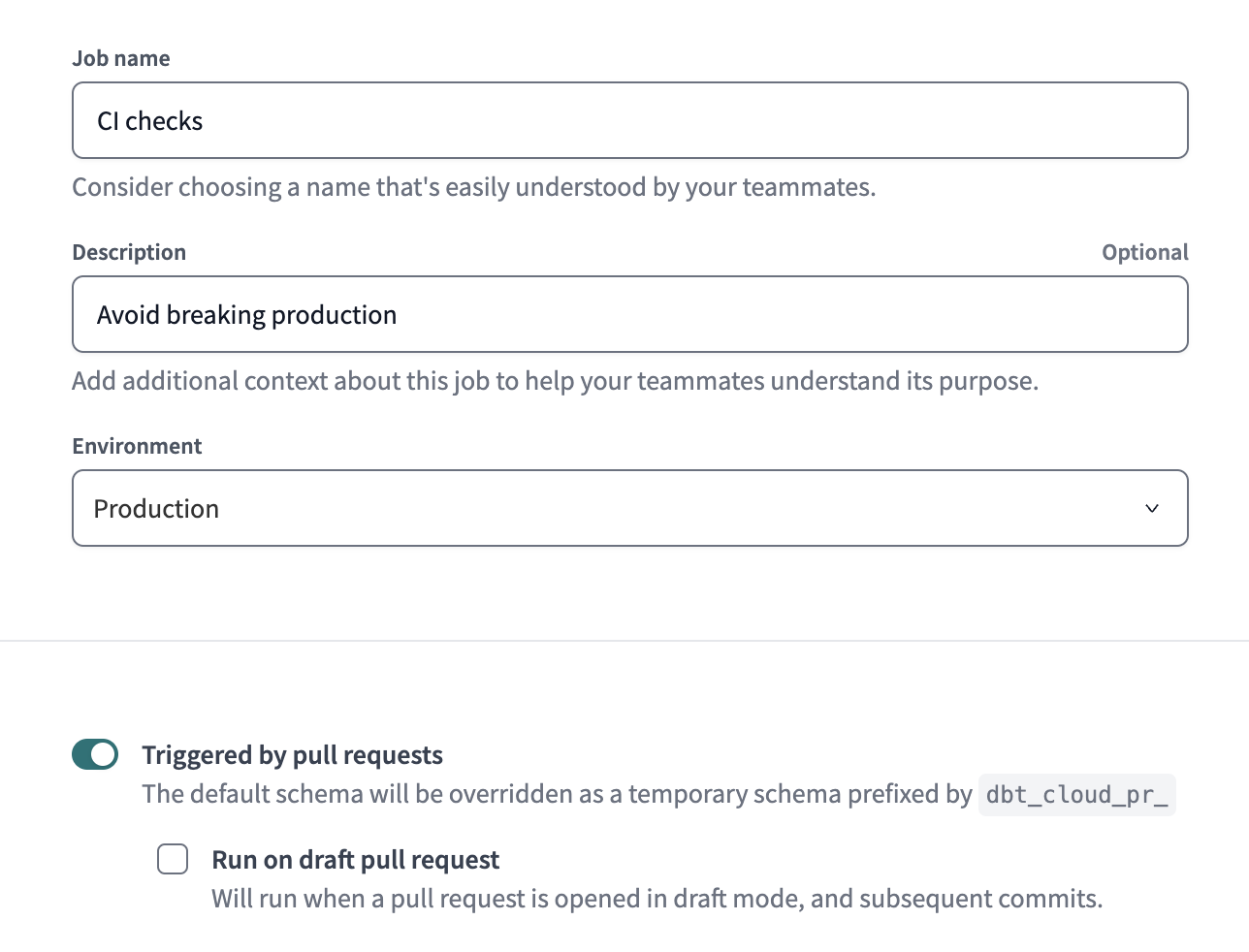

In there, we can create jobs

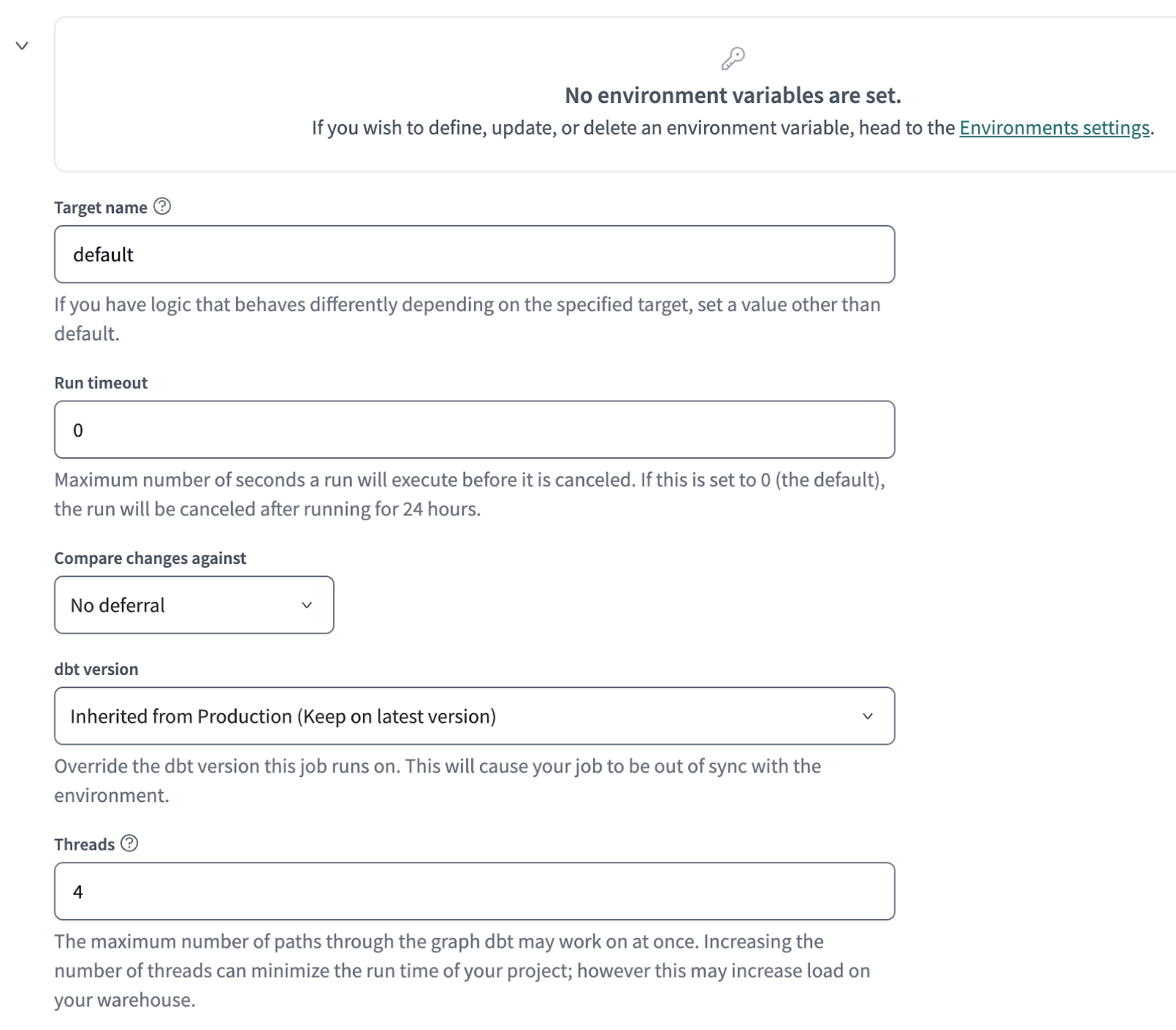

Where we have a Job settings section

Execution settings section

Triggers

And advanced settings section

Once created, we see: (there is a next run because I set it to run automatically every friday midday UTC)

And we can trigger a run via API as well. I pressed Run now and I see a new run:

We can see details by clicking on it (the warning is about a deprecated functionality)

This also generates a hosted documentation.

Going back to creating jobs ~ we can also create CI jobs There is a git trigger on pull request

There is an option to list commands where we can run whatever we want. And also a field to select with which env to compare the run

A final task - visualising data with Google Data Studio

Create a new report, make a connection to BigQuery and we can see the fields of the selected table

After some playing around on Looker ~

All the code from today as in dbt + a pdf of the visualisation is on my repo.

That is all for today!

See you tomorrow :)